AWS Multi-Account Strategy: Part 2

In Part 1 of the series, I discussed the drivers and solution for a secure, cost-efficient cloud architecture for a small system. In Part 2, I dive into WebomateS cloud's networking. I look at the details of the cross-account VPC peering, subnetting design and network segmentation.

Motivation

We had these goals in mind in the networking design:

- Security

- Isolate dev, qa and prod traffic

- Segment public Internet facing services from private services

- Enable exceptions where needed

- Allow for expansion

- Enable resilient (redundant) systems

Network segmentation is a foundational aspect of both data center and cloud security strategy. It's also vital to design your network from the ground up for growth and for resilience (multiple Availability Zones (AZs) and multiple regions), even if you aren't ready for them yet.

And as always, our constraints were:

- Limit additional cloud provider costs

- Limit operational overhead

Before we get into subnetting, let's look at VPC peering and especially the exceptions.

Secure VPC Peering

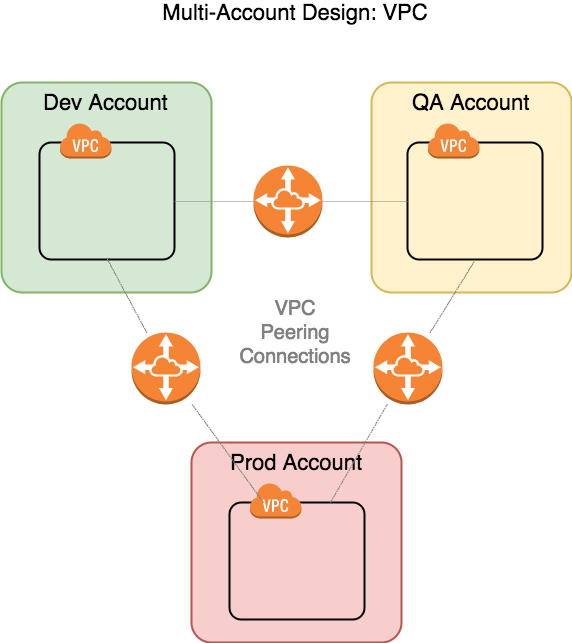

VPC peering requires a one-to-one connection, so the three accounts require three peering connections.
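Peering is not transitive, so every pair of accounts that needs to talk gets its own connection. Here's a minimal Python sketch of that count; the account names are just labels for illustration.

```python
from itertools import combinations


def peering_pairs(accounts):
    """Return every unordered pair of accounts.

    Each pair needs its own peering connection because
    peering connections are one-to-one and not transitive.
    """
    return list(combinations(accounts, 2))


accounts = ["dev", "qa", "prod"]
for left, right in peering_pairs(accounts):
    print(f"{left} <-> {right}")
print(len(peering_pairs(accounts)), "peering connections")
```

A full mesh grows as n(n-1)/2, so a fourth account would already need six connections. That's worth keeping in mind before adding accounts.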

Setting up a peering connection is a snap. The request is initiated from one account to another; it doesn't matter which one because the connection is bi-directional. Route tables must be set up on both sides for it to work properly. More importantly, each VPC needs a unique, non-overlapping address range.
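Overlapping ranges are easy to check for before you request a peering connection. This is a small sketch using Python's standard `ipaddress` module; the dev and prod ranges are the ones used in this article, while the overlapping `qa` range in the second call is a made-up example to show a failure.

```python
import ipaddress
from itertools import combinations


def overlapping_ranges(vpc_cidrs):
    """Return the pairs of VPC names whose CIDR blocks overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in vpc_cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(nets, 2)
        if nets[a].overlaps(nets[b])
    ]


# Ranges from this article: no overlap, so peering is possible.
print(overlapping_ranges({"dev": "10.0.0.0/16", "prod": "172.16.0.0/16"}))

# Hypothetical qa range that sits inside the dev range.
print(overlapping_ranges({"dev": "10.0.0.0/16", "qa": "10.0.128.0/17"}))
```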

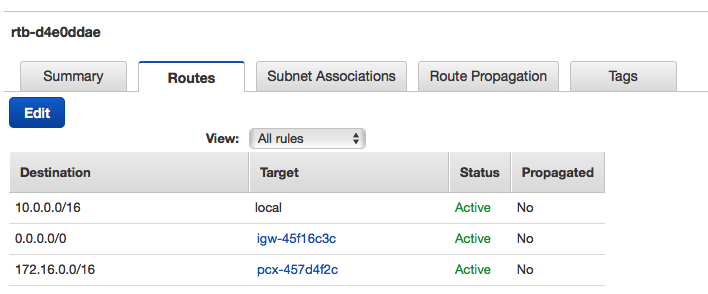

The dev account range is 10.0.0.0/16 and prod is 172.16.0.0/16. Here's the route table for Dev:

The first route keeps all local traffic local; the third rule routes the prod range to the peering connection; the second sends everything else to the Internet Gateway.
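To make the lookup concrete, here's a minimal Python sketch of how a route table picks a target: the most specific matching prefix wins, regardless of the order the routes are listed in. The target names (`local`, `igw`, `pcx`) are placeholders I made up for illustration, not real resource IDs.

```python
import ipaddress

# Dev route table as described above: (destination CIDR, target).
DEV_ROUTES = [
    ("10.0.0.0/16", "local"),   # keep dev traffic local
    ("0.0.0.0/0", "igw"),       # everything else goes to the Internet Gateway
    ("172.16.0.0/16", "pcx"),   # prod range goes to the peering connection
]


def route_target(routes, destination):
    """Return the target of the most specific route matching destination."""
    dest = ipaddress.ip_address(destination)
    matches = []
    for cidr, target in routes:
        network = ipaddress.ip_network(cidr)
        if dest in network:
            matches.append((network.prefixlen, target))
    if not matches:
        return None
    # Longest prefix (largest prefix length) wins.
    return max(matches)[1]


for ip in ("10.0.22.238", "172.16.0.166", "8.8.8.8"):
    print(ip, "->", route_target(DEV_ROUTES, ip))
```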

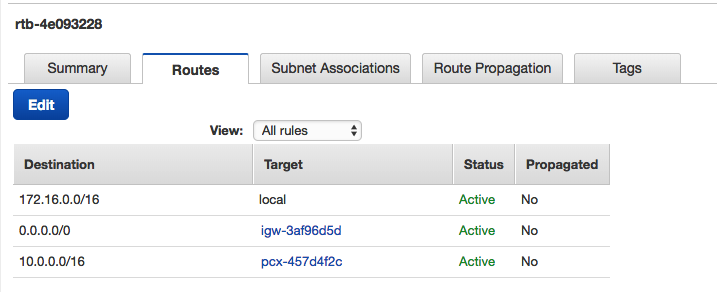

Here's the flipside in Prod:

So why do we want to peer VPCs if the whole point here of the account split is isolation?

You may recall from Part 1 that IAM user, AMI and ECR image sharing requires no network links. We don't want to allow normal traffic flow, but we do have these exceptions:

- Deploy built artifacts from a centralized build server

- Save infrastructure costs and have one service instance, e.g. a data store like RDS, serve multiple environments

- Push data periodically from one account to another, e.g. to refresh a dev database from prod on a weekly basis

Implementing Exceptions

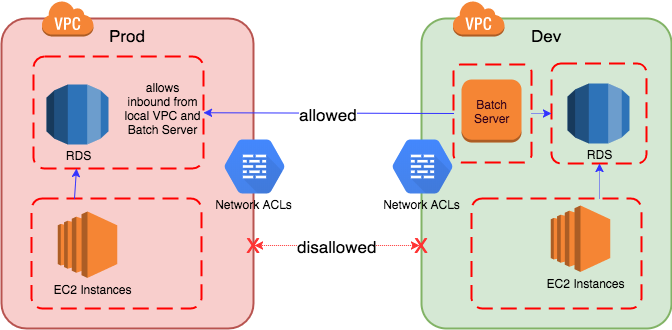

Let's look at this last example in more depth. We want to 'pinhole' one-way MySQL traffic from dev to prod for specific IPs, not open it up wide, willy-nilly.

In a Data Center environment, thinking defense in depth, we can address this at multiple levels:

- Network borders

- Router/Switch-based Access Control Lists (ACLs)

- Border Gateway ACLs

- Network Firewalls

- Proxies, e.g. HTTP Forward proxies

- Route Tables

- Router and Border Gateway based rules

- Host

- Host-based Firewall

But in a public cloud provider such as AWS, the network is abstracted and we have different mechanisms to work with: VPC Network ACLs and Security Groups. WebomateS doesn't use host-based firewalls; the operational overhead is high and Security Groups cover that function fairly well. We use HTTP reverse proxies and intend to use forward proxies in the architecture for a different reason; I'll cover this later. Here's a view of this limited interaction:

Network ACLs and Security Groups work very differently, but we use them both to layer defenses. (By keeping Network ACLs strict and modification permissions very limited, we protect the home base, even as we allow developers to manage Security Groups in lower environments.)

Network ACLs

A Network ACL is applied at the subnet level, is stateless, has ordered rules and can allow or deny traffic. Stateless means you need to have both inbound and outbound symmetric rules to allow a request in and a response out (and vice versa).

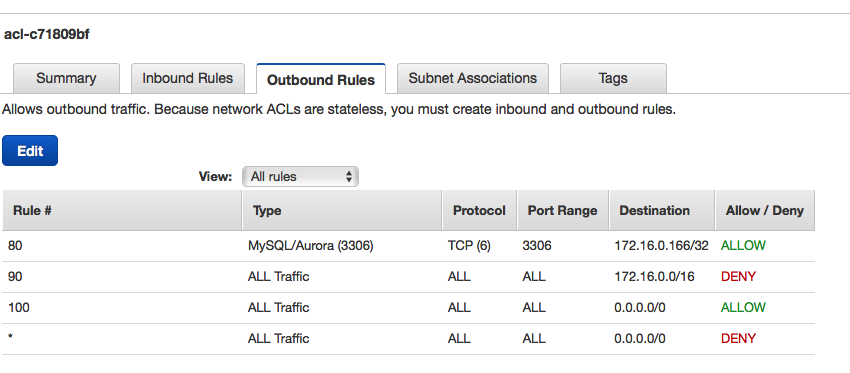

The target RDS instance is on 172.16.0.166. The source, a batch-oriented server that runs the weekly job, is on 10.0.22.238. The Outbound ACL on Dev looks like this:

Inbound looks identical. Rules are applied in the order listed. Rule 80 enables egress to destination port 3306 on 172.16.0.166. Rule 90 disables egress of any other traffic to the prod VPC address range. Rule 100 enables outbound traffic to the Internet.
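Ordered evaluation is easier to see in code. Below is a minimal Python sketch of first-match-wins rule processing, with the three Dev outbound rules transcribed from the description above. The `AclRule` type and `evaluate` function are my own illustration, and the sketch models only destination address and port, leaving out protocol and the return path.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AclRule:
    number: int
    cidr: str
    port: Optional[int]  # None means all ports
    allow: bool


# Dev outbound ACL as described in the text.
DEV_OUTBOUND = [
    AclRule(80, "172.16.0.166/32", 3306, True),   # MySQL to the prod RDS instance
    AclRule(90, "172.16.0.0/16", None, False),    # nothing else to the prod range
    AclRule(100, "0.0.0.0/0", None, True),        # Internet egress
]


def evaluate(rules, dest_ip, dest_port):
    """Apply rules in ascending number order; the first match decides."""
    addr = ipaddress.ip_address(dest_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        in_range = addr in ipaddress.ip_network(rule.cidr)
        port_ok = rule.port is None or rule.port == dest_port
        if in_range and port_ok:
            return rule.allow
    return False  # nothing matched: implicit deny


print(evaluate(DEV_OUTBOUND, "172.16.0.166", 3306))  # pinhole
print(evaluate(DEV_OUTBOUND, "172.16.0.166", 22))    # same host, other port
print(evaluate(DEV_OUTBOUND, "8.8.8.8", 443))        # Internet
```

Swapping the numbers on rules 80 and 90 would close the pinhole, because the broad deny would match first. That's why the specific allow has to carry the lower number.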

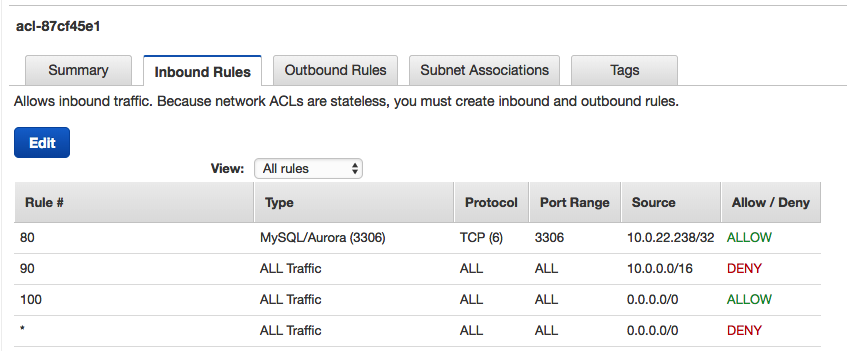

Here's the flipside ACL in Prod:

Rule 80 enables ingress from a specific IP, while rule 90 blocks all other addresses in the Dev VPC.

(Note: You'll need another ACL rule to enable access on a failover to the secondary RDS instance in another AZ. Or you could ignore this as it's unlikely to occur during the job.)

Security Groups

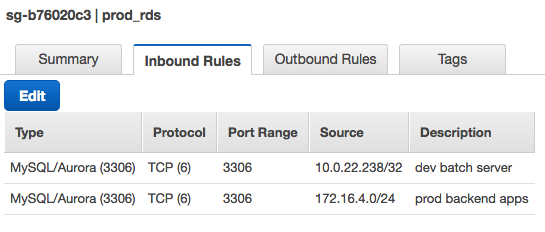

Security Groups only allow traffic in or out, cannot block traffic by IP address range, and are stateful. Stateful implies asymmetry: we need either inbound or outbound, not both. We only need inbound rules for the RDS Security Group:

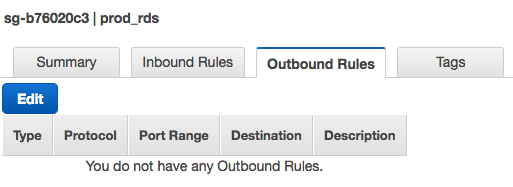

Nothing needed on Outbound:

The flipside is an Outbound rule on the batch server's Security Group allowing egress on port 3306 to IP 172.16.0.166/32.
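Here's a toy Python model of what 'stateful' buys us: once an inbound connection is accepted, the reply is allowed without any outbound rule. The `SecurityGroup` class is purely illustrative, it tracks connections by peer IP and port rather than a full 5-tuple, and I'm assuming the RDS inbound rule names the batch server's IP as its source.

```python
import ipaddress


class SecurityGroup:
    """Allow-only rules plus simple connection tracking."""

    def __init__(self, inbound, outbound):
        self.inbound = inbound    # list of (cidr, port) allow rules
        self.outbound = outbound  # list of (cidr, port) allow rules
        self._tracked = set()     # accepted inbound connections

    @staticmethod
    def _matches(rules, ip, port):
        addr = ipaddress.ip_address(ip)
        return any(
            addr in ipaddress.ip_network(cidr) and port == rule_port
            for cidr, rule_port in rules
        )

    def accept_inbound(self, src_ip, dst_port):
        """Accept a new inbound connection if an inbound rule allows it."""
        if self._matches(self.inbound, src_ip, dst_port):
            self._tracked.add((src_ip, dst_port))
            return True
        return False

    def allow_response(self, peer_ip, local_port):
        """Replies on a tracked connection need no outbound rule."""
        return (peer_ip, local_port) in self._tracked

    def initiate_outbound(self, dst_ip, dst_port):
        """New outbound connections do need an outbound rule."""
        return self._matches(self.outbound, dst_ip, dst_port)


# RDS side: inbound only (source IP is an assumption), nothing outbound.
rds_sg = SecurityGroup(inbound=[("10.0.22.238/32", 3306)], outbound=[])
print(rds_sg.accept_inbound("10.0.22.238", 3306))     # batch server gets in
print(rds_sg.allow_response("10.0.22.238", 3306))     # reply flows back
print(rds_sg.initiate_outbound("10.0.22.238", 3306))  # RDS can't call out
```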

Now that we've covered cross-account restrictions, let's look at intra-VPC restrictions and subnetting.

Network Design

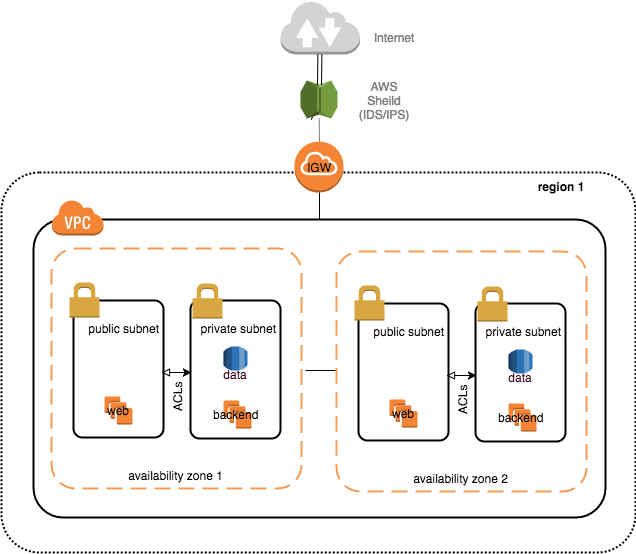

A best practice in networking is to separate your Internet facing hosts from your backend services, applications and data stores. This segmentation both limits the attack surface and contains a breakout.

Internet-facing hosts are those serving inbound requests, like load balancers, web servers, reverse proxies, email servers and bastion hosts (e.g. SSH & RDP jumpboxes). The Internet-facing subnet is often called a Demilitarized Zone (DMZ); Amazon calls it a public subnet, and calls the non-public subnet a private subnet. You add Network ACLs and Security Groups (and host-based firewalls) to limit the traffic to the backend.

Outbound traffic is a bit trickier to manage. Note that by default in AWS:

- All subnets are associated with the Internet Gateway by default

- The default Security Group Outbound rule, present in all new Security Groups, allows all ports to 0.0.0.0/0

It sure is convenient to leave the default Internet access. At will, you can download the latest Linux packages, anti-virus definitions, Docker Hub repos, etc., as well as talk to various AWS services, including the ECS manager. Or maybe regular access is needed to GitHub, Facebook, Gmail, etc. The critical risk here is that software could accidentally or intentionally egress sensitive data, and malevolent software could launch attacks outbound (e.g. DDoS).

Here are the options we've considered:

- Leave access open and hope for the best - riskiest but cheapest

- Leave access open and regularly audit egress through VPC flow logs - high operational overhead

- Leave access open and automate alerts on VPC flow logs - development effort, operational overhead to maintain allowed subdomain lists

- Add IP-based allows in Security Groups - This is at a minimum operationally challenging when partner IPs change, and at worst infeasible with large Internet subdomains that front a dynamic cluster

- Proxy access through a forward proxy like Squid for HTTP - Lower operational overhead with easier-to-manage subdomain-based rules, plus some dev effort to migrate all app access to use proxies
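To show why subdomain-based rules are easier to live with than IP lists, here's a minimal Python sketch of the kind of check a forward proxy makes on each request. The function and the allow list are hypothetical, written for illustration only; this is not how Squid itself is configured.

```python
def is_allowed(host, allowed_domains):
    """Allow a host if it equals an allowed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    for domain in allowed_domains:
        domain = domain.lower().lstrip(".")
        # Require a dot boundary so "evilgithub.com" does not match "github.com".
        if host == domain or host.endswith("." + domain):
            return True
    return False


# Hypothetical allow list for illustration.
ALLOWED = ["github.com", "amazonaws.com"]

for host in ("api.github.com", "github.com", "evilgithub.com", "example.org"):
    print(host, is_allowed(host, ALLOWED))
```

One domain entry covers however many IPs sit behind it, which is the operational win over Security Group rules that must be updated whenever a partner's addresses change.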

Tough choices, right? Let me know if you've got a better strategy.

Back to segmentation ... we put together a template for the VPC and just copied it to the other accounts:

As with VPC peering exceptions, Network ACLs and Security Groups are employed to control flow in and out of the subnets, with the private subnets much more restricted. Ideally, all inbound and outbound traffic to both public and private subnets is encrypted (TLS, SSH, IPsec). However, this presents many challenges with secrets management, logging and triage, plus additional compute needs and latency. In this system, the backend communication is plain text. A typical inbound path is HTTPS (443) to public, HTTP (8080) to private, and MySQL (3306) from private to the data store.

Subnetting

This seems future-proof. We can expand without rebuilding. If we want to further segment private subnets into backends and data stores, we can do that. If we want to segment the public into, say, reverse proxies, forward proxies and load balancers, we can do that. If we expand to other regions, we duplicate the VPC in the same accounts.

The template uses the following subnet ranges:

- VPC: /16 or 65,536 addresses (65,531 usable)

- AZ: /20 or 4,096 addresses (4,091 usable)

- Subnet: /24 or 256 addresses (251 usable)
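The arithmetic behind those numbers can be checked with Python's standard `ipaddress` module. This is a sketch of carving a /16 into /20 blocks per AZ and /24 subnets within each block; the `carve` helper is my own, and 10.0.0.0/16 is the dev range from earlier. AWS reserves five addresses in every subnet, which is where 251 comes from.

```python
import ipaddress

AWS_RESERVED_PER_SUBNET = 5  # first four addresses and the last one


def carve(vpc_cidr, az_prefix=20, subnet_prefix=24):
    """Map each AZ-sized block in the VPC to the subnets inside it."""
    vpc = ipaddress.ip_network(vpc_cidr)
    return {
        az_block: list(az_block.subnets(new_prefix=subnet_prefix))
        for az_block in vpc.subnets(new_prefix=az_prefix)
    }


plan = carve("10.0.0.0/16")
first_az = next(iter(plan))
first_subnet = plan[first_az][0]

print(len(plan), "AZ-sized /20 blocks in the VPC")
print(len(plan[first_az]), "/24 subnets in", first_az)
print(first_subnet, "has", first_subnet.num_addresses, "addresses,",
      first_subnet.num_addresses - AWS_RESERVED_PER_SUBNET, "usable")
```

With 16 blocks per VPC and 16 subnets per block, there's plenty of headroom left after assigning a handful of AZs and a public/private pair in each.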

One other note: we used 10.x.x.x in lower environments and 172.x.x.x in prod. This gives all users a clue to be extra careful when dealing with 172.x.x.x addresses.

Costs

All network and related security constructs are free in AWS, though there are Premium versions of Shield and Trusted Advisor.

Inbound data into any network or service is generally free, whereas transfer out is charged, generally at $0.01/GB. Many AWS managed services allow free transfer out to EC2 instances (virtual machines) within the same region using private IPs. So you'll want to keep data flowing within the VPC and not back out to the Internet and in again, not only for cost reasons but also for security. Inter-region as well as inter-AZ transfer out is charged, so minimize this as well in your architecture.

Summary

Establishing a VPC network template enabled us to greatly improve security through segmentation, smartly implement exceptions, and contain cloud provider and operational costs. The template allows for further expansion for growth (more compute) and resilience (replicas) as the business grows.

Published Jan 16, 2018

Image ‘Earth Orbit Night Lights’ by NASA, CC0 1.0 license

Comments? email me - mark at sawers dot com